A post from Berkeley: Modeling Extremely Large Images with xT

As computer vision researchers, we believe that every pixel can tell a story. However, a writer's block seems to be settling into the field when it comes to large images. Large images are no longer rare: the cameras we carry in our pockets and those orbiting our planet take pictures so big and detailed that they push our current best models and hardware to their breaking points. Generally, we face a quadratic increase in memory usage as a function of image size.
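To make the quadratic growth concrete, here is a back-of-the-envelope sketch. It assumes a hypothetical ViT-style model that cuts the image into 16×16-pixel patches and runs naive full self-attention, materializing one N×N score matrix per head per layer in fp16. The patch size, precision, and helper names are illustrative assumptions, not details of any particular model. The token count grows with the pixel count, and the attention matrix grows with the square of the token count, so doubling the side length multiplies attention memory by 16.

```python
# Hypothetical setup: ViT-style patching plus naive full self-attention.
# PATCH and BYTES_PER_ENTRY are assumptions chosen for illustration only.
PATCH = 16            # assumed patch side length in pixels
BYTES_PER_ENTRY = 2   # assumed fp16 storage for each attention score


def num_tokens(side: int, patch: int = PATCH) -> int:
    """Number of patch tokens for a square image with the given side length."""
    return (side // patch) ** 2


def attention_matrix_bytes(side: int, patch: int = PATCH) -> int:
    """Bytes for one N x N attention score matrix (one head, one layer)."""
    n = num_tokens(side, patch)
    return n * n * BYTES_PER_ENTRY


for side in (224, 1024, 4096):
    gib = attention_matrix_bytes(side) / 2**30
    print(f"{side}x{side}: {num_tokens(side):,} tokens, {gib:.4f} GiB per attention map")
```

Under these assumptions a 4096×4096 image yields 65,536 tokens, and a single attention map for one head in one layer already needs 8 GiB, before counting other heads, layers, or activations.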

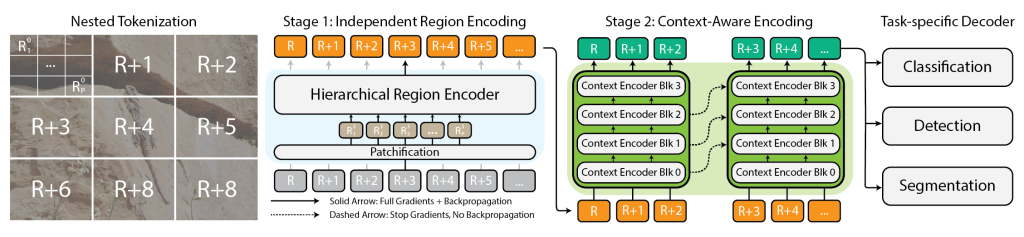

Today, we make one of two sub-optimal choices when handling large images: down-sampling or cropping. Both discard a significant amount of the information in an image: down-sampling loses fine detail, while cropping loses the surrounding context. We take another look at these approaches and introduce $x$T, a new framework that models large images end-to-end on contemporary GPUs while effectively aggregating global context with local details.
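The trade-off between the two standard choices can be illustrated with a toy NumPy sketch. It assumes a hypothetical model that can only afford a 512×512 input. Down-sampling (here crude strided subsampling, with no anti-aliasing) keeps the whole field of view but only 1/16 of the pixels of a 2048×2048 image. Cropping keeps full resolution but only 1/16 of the area. Tiling the image into budget-sized regions keeps every pixel while bounding the size of each piece; this is only meant to illustrate that idea, is not $x$T's code, and does not show how $x$T encodes or aggregates regions. All function names are our own.

```python
import numpy as np


def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Keep every `factor`-th pixel: full field of view, fewer pixels."""
    return img[::factor, ::factor]


def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Keep a size x size window from the center: full resolution, less context."""
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]


def split_into_regions(img: np.ndarray, region: int) -> np.ndarray:
    """Tile an (H, W, C) image into non-overlapping region x region blocks.

    Returns shape (num_regions, region, region, C), ordered row by row.
    Assumes H and W are divisible by `region`.
    """
    h, w, c = img.shape
    assert h % region == 0 and w % region == 0, "image must tile evenly"
    blocks = img.reshape(h // region, region, w // region, region, c)
    return blocks.transpose(0, 2, 1, 3, 4).reshape(-1, region, region, c)


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(2048, 2048, 3), dtype=np.uint8)
budget = 512  # hypothetical largest input side the model can afford

small = downsample(img, img.shape[0] // budget)  # all context, 1/16 of the pixels
crop = center_crop(img, budget)                  # full detail, 1/16 of the area
regions = split_into_regions(img, budget)        # every pixel, in budget-sized tiles
print(small.shape, crop.shape, regions.shape)
```

Each of the three outputs fits the same per-input budget, but only the tiled version preserves both the full field of view and the full resolution; the remaining challenge is combining information across tiles.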

Architecture for the $x$T framework.